We are all working towards creating an environment of trust where players would never even consider cheating and the thought that someone may have received assistance wouldn’t cross our minds.

Until that time comes, what do you do with this report?

Disclaimers

- Please use these statistics as a Guide

- Build expectations over time; use multiple events to establish your own benchmarks

- My comments are general in nature and should not be applied to specific players or situations

- Use your own intuition and knowledge of a player

- Have a default setting of trust

- Forgive easily

- Remember that you can never be “certain” that a player had assistance. It is theoretically possible to roll a die and come up with a six 100 times in a row. Learn to live in the probabilistic realm
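To put a number on the dice example above, here is a small sketch that computes the chance of rolling 100 sixes in a row. It assumes a fair six-sided die and independent rolls; the function name `prob_all_sixes` is mine and is not part of any Tornelo report.

```python
from fractions import Fraction


def prob_all_sixes(rolls: int) -> Fraction:
    """Exact probability that a fair six-sided die shows a six on every roll.

    Assumes the rolls are independent and each face has probability 1/6.
    """
    if rolls < 0:
        raise ValueError("rolls must be non-negative")
    return Fraction(1, 6) ** rolls


p = prob_all_sixes(100)
# Printed in scientific notation; the value is on the order of 1e-78.
print(f"Probability of 100 sixes in a row: {float(p):.3e}")
```

The result is tiny but not zero, which is the point: the statistics can make assistance look very likely or very unlikely, but they never deliver certainty.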

What is and isn’t suspicious?

- More data is better, assessments on

- It gets progressively more difficult to identify computer assistance as players’ natural skill level increases

- Our analysis is rudimentary and unreliable for players near to, or over, 2000 rating

- Events with faster time controls should see worse performance (higher CPL, lower Move Match)

The Simplest Interpretation

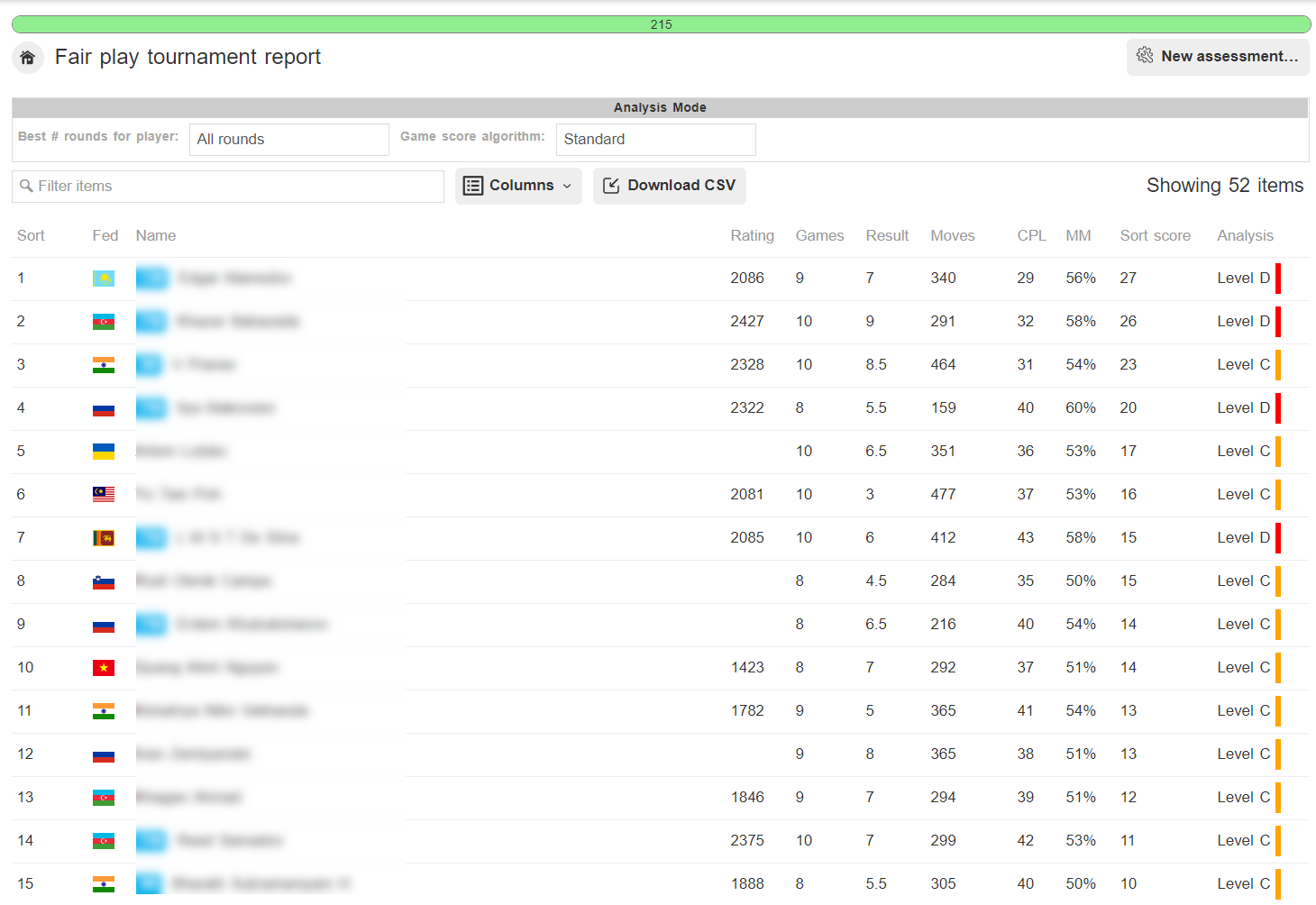

We sort the table from Most to Least likely to have had assistance. Keep your eyes on the top of the table.

Look at the Sort score Column:

- Anything >0 is worth glancing at

- >10 is likely an average club player on a good day

- >20 is likely a 2000+ rated player

- >40 is likely a GM-standard player having a good day
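If you export the table and want to label each row yourself, the bands above could be sketched like this. The function name and the wording for scores at or below zero are my own assumptions; only the four thresholds come from the list above.

```python
# Bands taken from the list above, checked from the highest threshold down.
SORT_SCORE_BANDS = [
    (40, "likely a GM-standard player having a good day"),
    (20, "likely a 2000+ rated player"),
    (10, "likely an average club player on a good day"),
    (0, "worth glancing at"),
]


def describe_sort_score(score: float) -> str:
    """Return the rough interpretation for a Sort score (hypothetical helper)."""
    for threshold, description in SORT_SCORE_BANDS:
        if score > threshold:
            return description
    # The list gives no band for scores at or below zero (assumed wording).
    return "at or below zero: no band from the list applies"


for s in (-3, 5, 12, 25, 41):
    print(s, "->", describe_sort_score(s))
```

Treat the labels as a prompt for where to look first, not as a verdict on any player.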

Tornelo chooses the Level (depth) of analysis based on its algorithm, always trying to prove innocence. If more analysis is required, deeper analysis is triggered automatically. However, you can also trigger deeper analysis manually, from Level A up to Level D for an Event or a Player, or up to Level E for a single game.

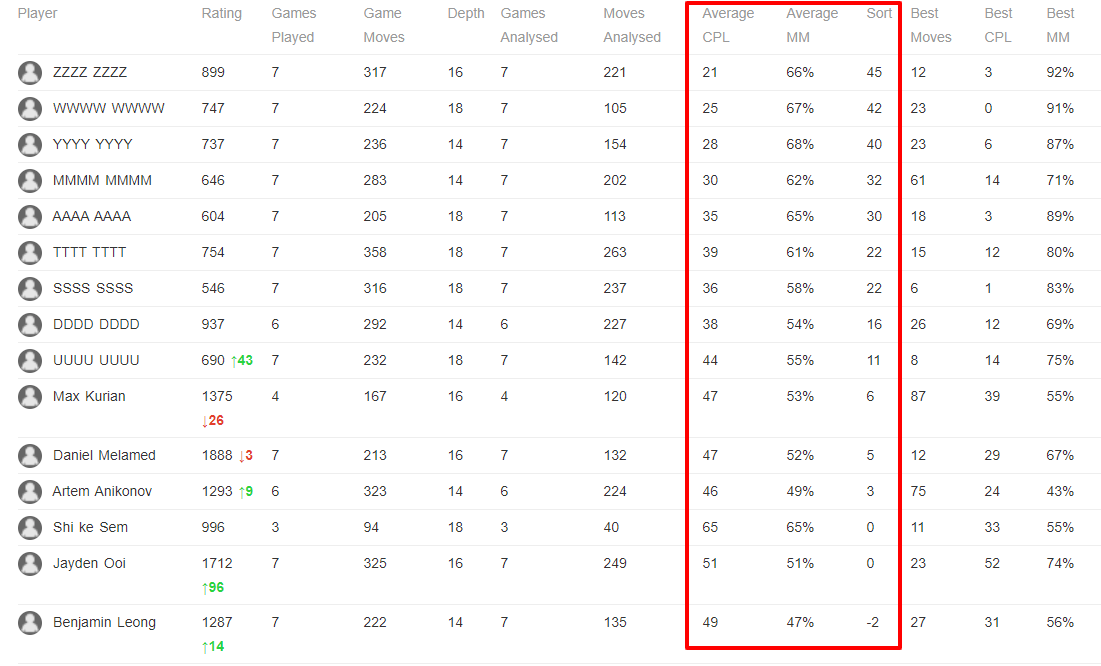

Benchmarks: Centi-Pawn Loss (CPL)

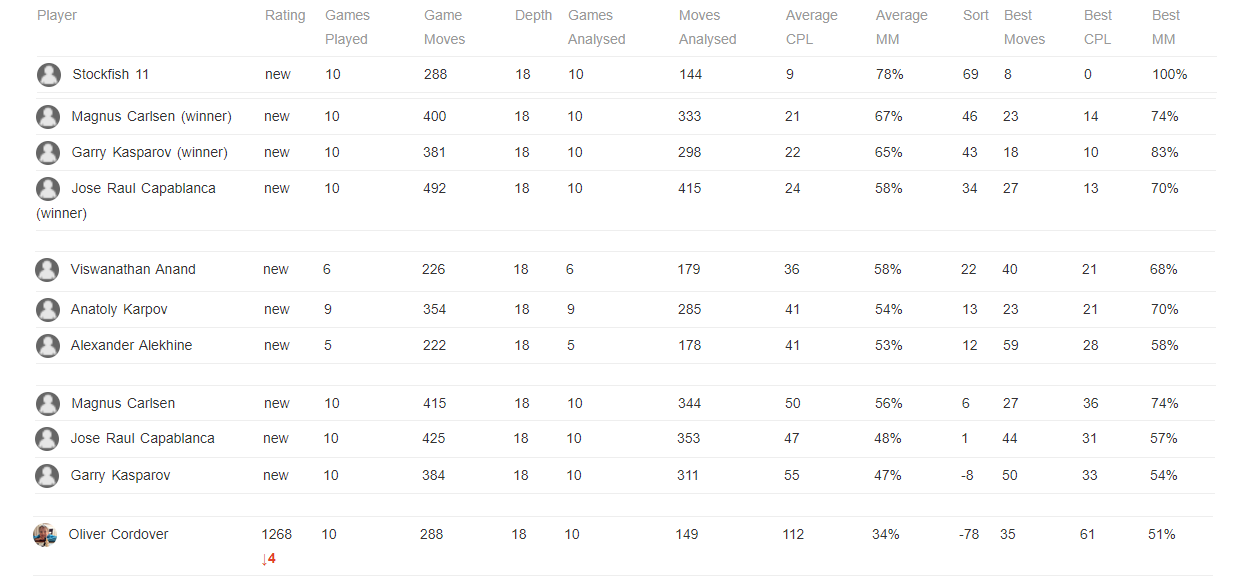

Using a random selection of 10 wins and 10 losses while World Champion:

- Magnus Carlsen CPL = 23

- Garry Kasparov CPL = 24

- Jose Raul Capablanca CPL = 22

Stockfish 11 achieves a CPL = 4.4

- Australian Junior Championships overall event performance is CPL = ~64
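For readers who want to see what sits behind a CPL number, here is a minimal sketch using the common definition: for each move, take the engine's evaluation of its best move minus its evaluation of the move actually played, then average. This is an assumption on my part and may differ from how Tornelo calculates the figure; the function name and the sample evaluations are invented for illustration.

```python
from statistics import mean


def average_centipawn_loss(best_evals, played_evals):
    """Average centipawn loss over one player's moves (hypothetical helper).

    Both lists hold engine evaluations in centipawns, from the point of view
    of the player making the move: best_evals[i] for the engine's top choice,
    played_evals[i] for the move actually played.
    """
    if len(best_evals) != len(played_evals):
        raise ValueError("both lists must have one entry per move")
    if not best_evals:
        raise ValueError("at least one move is required")
    # Clamp at zero so that engine noise never produces a negative loss.
    losses = [max(0, best - played) for best, played in zip(best_evals, played_evals)]
    return mean(losses)


# Invented evaluations for four moves: losses are 0, 30, 0 and 80 centipawns.
best = [30, 50, 10, 120]
played = [30, 20, 10, 40]
print(average_centipawn_loss(best, played))  # 27.5
```

A lower CPL means the player's moves stayed closer to the engine's preferred moves.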

Benchmarks: Move Match % (MM)

Using a random selection of 10 wins and 10 losses while World Champion:

- Magnus Carlsen MM = 58.1%

- Garry Kasparov MM = 50.4%

- Jose Raul Capablanca MM = 56.7%

Stockfish 11 will match itself around 75% of the time

- Long-term classical tournament performance of a player rated >2700 (during the years 2006-2009) MM = 56%

- Individual events may be higher; Anton Smirnov rated ~2200+ (2012 Australian Junior Championships) MM = 59.4%
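Move Match is simple to sketch: count how often the move played is the same as the engine's first choice and express that as a percentage. The helper below assumes one engine choice per move and plain string comparison of move notation. It is an illustration only, not Tornelo's implementation, and the moves are invented.

```python
def move_match_percent(played_moves, engine_moves):
    """Percentage of played moves equal to the engine's first choice.

    Hypothetical helper: both lists hold moves in the same notation,
    one entry per move made by the player being checked.
    """
    if len(played_moves) != len(engine_moves):
        raise ValueError("both lists must have one entry per move")
    if not played_moves:
        raise ValueError("at least one move is required")
    matches = sum(p == e for p, e in zip(played_moves, engine_moves))
    return 100.0 * matches / len(played_moves)


# Invented example: three of the five moves match the engine's choice.
played = ["e4", "Nf3", "Bb5", "O-O", "d3"]
engine = ["e4", "Nf3", "Bc4", "O-O", "d4"]
print(move_match_percent(played, engine))  # 60.0
```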

What about just one game?

The more data the better. Looking at just one game is really dangerous; however, here are some tips for when to be suspicious:

Move Match thresholds

- 2000+ rated players > 60%

- Club players >55%

- Scholastic players >50%
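As a rough sketch, the Move Match thresholds above could be applied to a single game like this. The category keys and the function name are mine; the percentages are the ones listed above, and exceeding one is only a reason to look closer.

```python
# Single-game Move Match thresholds from the list above, in percent.
MOVE_MATCH_THRESHOLDS = {
    "2000+": 60.0,
    "club": 55.0,
    "scholastic": 50.0,
}


def exceeds_move_match_threshold(category: str, move_match: float) -> bool:
    """True if a single game's Move Match % is above the listed threshold.

    Hypothetical helper: category must be one of the keys defined above.
    """
    if category not in MOVE_MATCH_THRESHOLDS:
        raise ValueError(f"unknown category: {category!r}")
    return move_match > MOVE_MATCH_THRESHOLDS[category]


print(exceeds_move_match_threshold("club", 58.0))   # True
print(exceeds_move_match_threshold("2000+", 58.0))  # False
```

The same 58% game reads differently depending on who played it, which is why your own knowledge of the player matters.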

Centi-Pawn Loss thresholds

- 2000+ rated players

- Club players

- Scholastic players

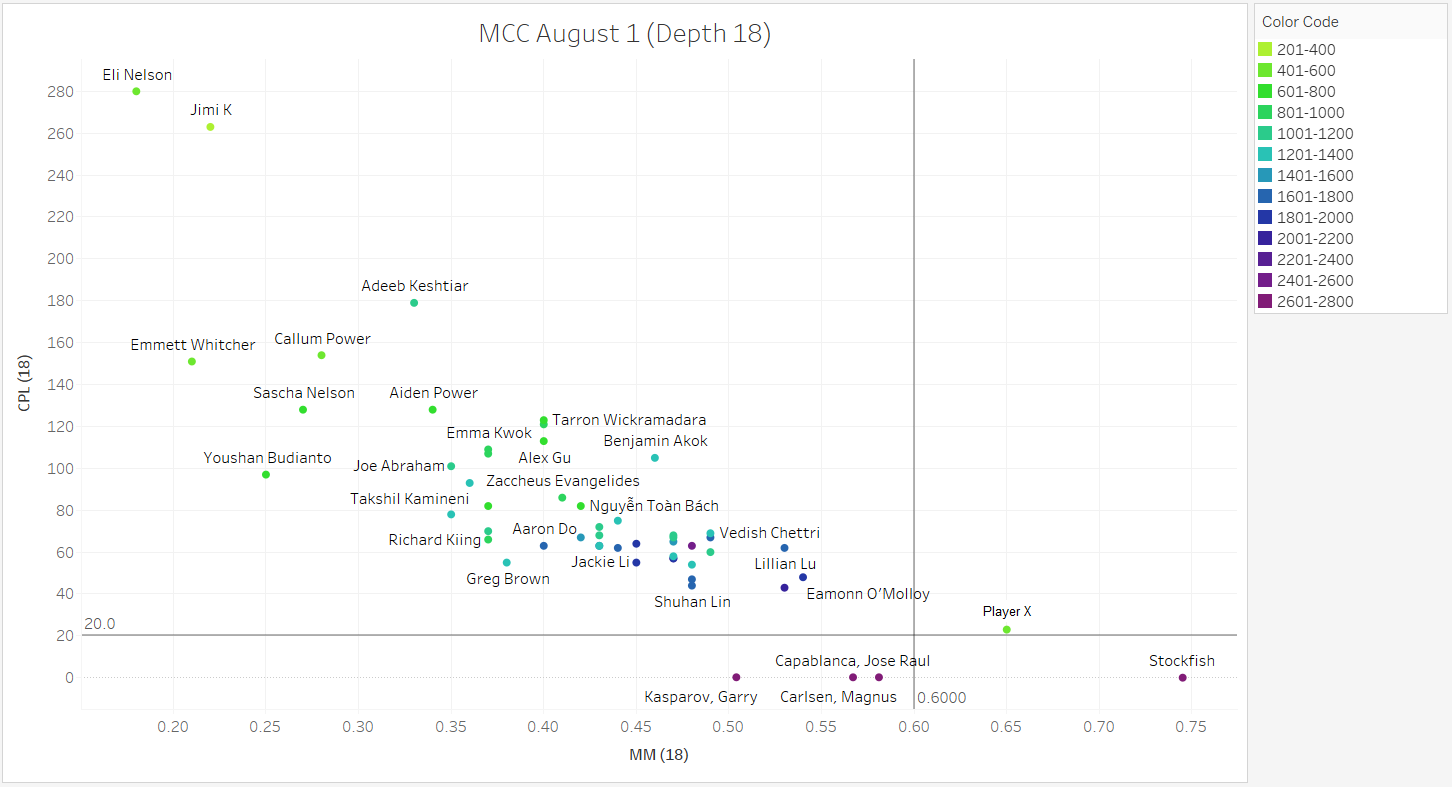

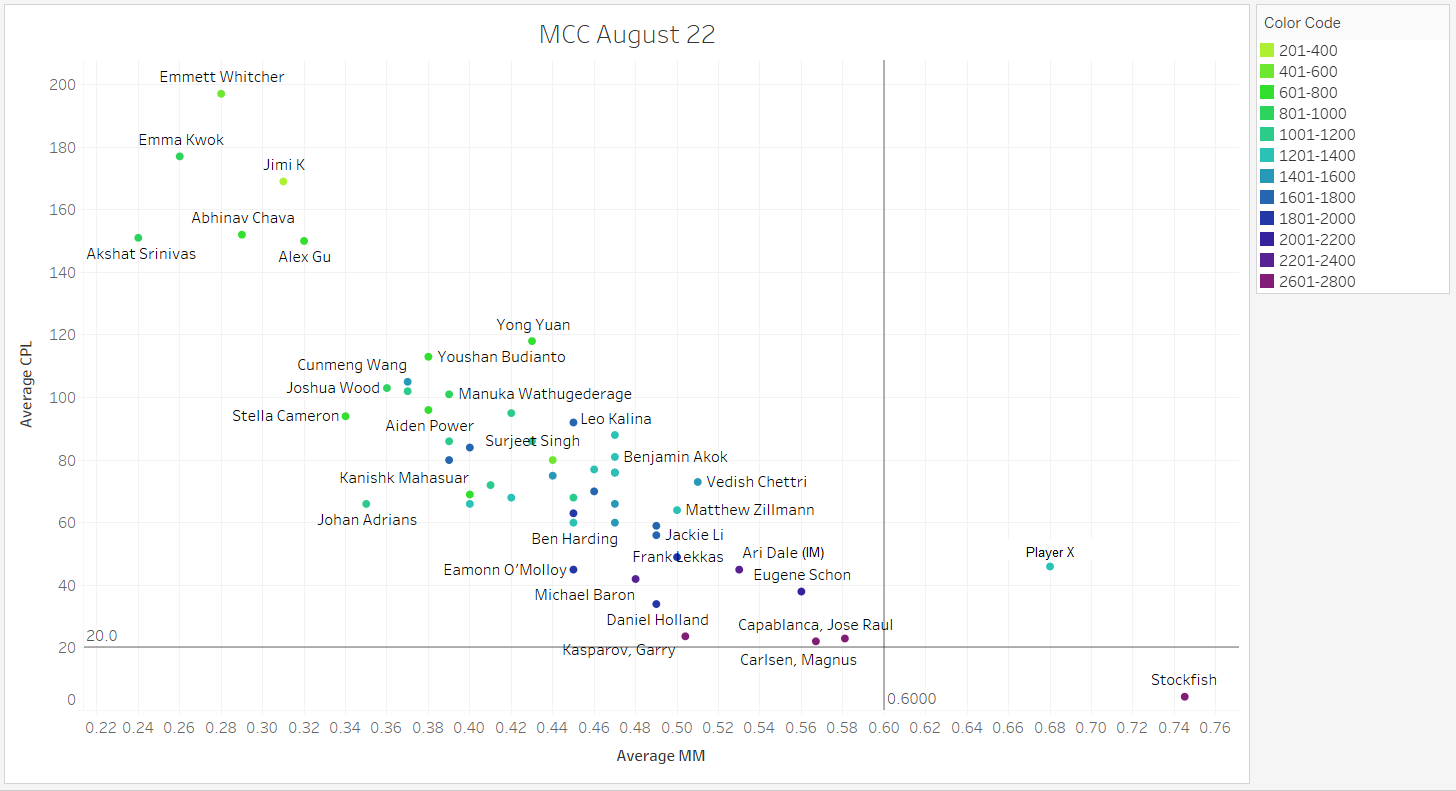

Can you pick the cheater?

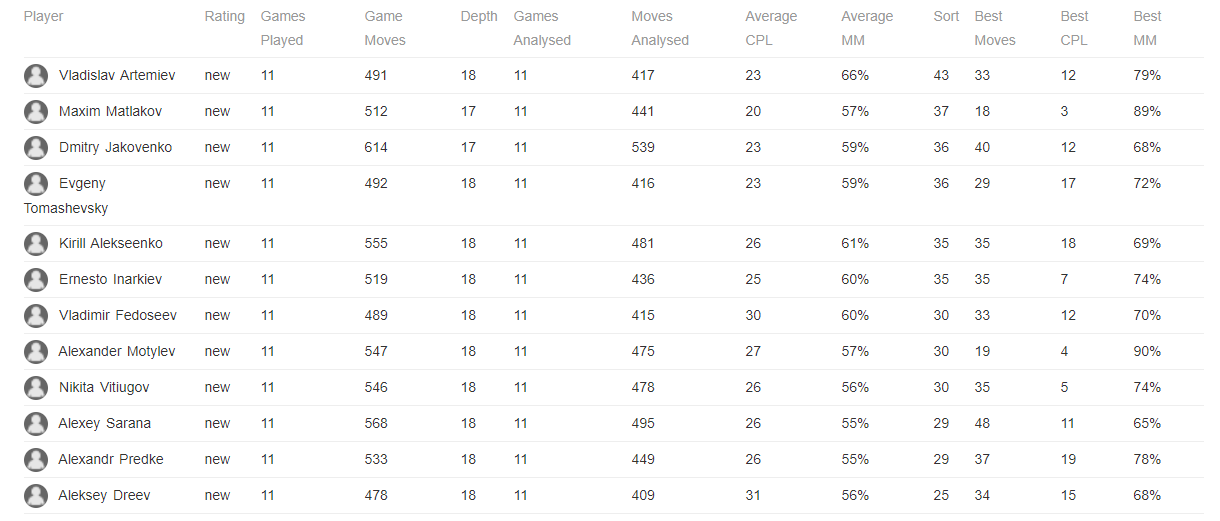

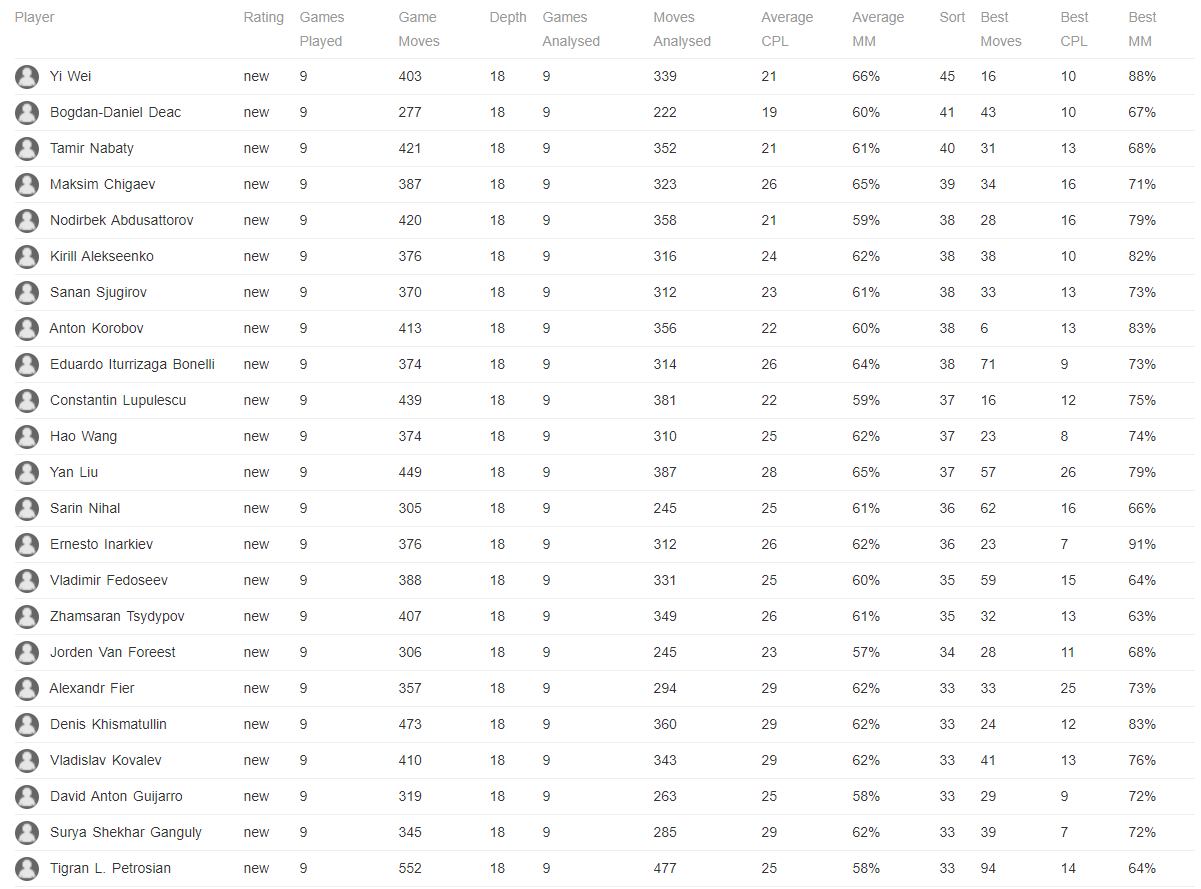

A graphical representation of your Fair Play report is coming soon. I’ll start you off with a couple of easy examples – one player in each of the two following events admitted to having received assistance. Can you pick who?

In closing, I’ll leave you with this link:

Why dealing with cheating in chess needs human input.

I highly recommend you read the blog post and explore the entire visualisation before you make a decision about what punishments you apply to players in your community that do cheat.

Our statistical analysis is based on work by the world’s leading expert at detecting assistance in chess games, Dr Kenneth Regan of the University at Buffalo (http://www.buffalo.edu/news/experts/ken-regan-faculty-expert-chess.html).

Despite loving Ken’s work, we have no affiliation with Dr Regan and all our analysis is our own. His work is far more sophisticated and provides far greater fidelity than our results. If you are running an event with players over 2000 rating, and you are concerned about chess cheating, I highly recommend you reach out to Dr Regan.

Addendum

Here are some examples of really strong Over The Board events from 2019, played with no assistance provided.

The first one is from the 2019 Russian Championships Superfinals (Tornelo page).

The second is the 2019 Aeroflot Open (Tornelo page)

And here are the benchmark games from some World Champions. The results at the top are 10 games which they Won, the ones at the bottom are from 10 games when they Lost.

What to say to parents or teachers?

Players online, especially juniors, can be tempted to break the rules by getting help from a computer engine. We undertake a correlation analysis of all games played in our events in order to protect players with exceptional performances from accusations of unfair play.

Our screening indicates that players X and Y are both highly likely to have received computer assistance during their game.